Chapter 1. Introduction

Digital Cinema Initiatives, LLC (DCI) is a joint venture of Disney, Fox, Paramount, Sony Pictures Entertainment, Universal, and Warner Bros. Studios. The primary purpose of DCI is to establish uniform specifications for d-cinema. These DCI member companies believe that d-cinema will provide real benefits to theater audiences, theater owners, filmmakers and distributors. DCI was created with the recognition that these benefits could not be fully realized without industry-wide specifications. All parties involved in d-cinema must be confident that their products and services are interoperable and compatible with the products and services of all industry participants. The DCI member companies further believe that d-cinema exhibition will significantly improve the movie-going experience for the public.

Digital cinema is today being used worldwide to show feature motion pictures to thousands of audiences daily, at a level of quality commensurate with (or better than) that of 35mm film release prints. Many of these systems are informed by the Digital Cinema System Specification, Version 1.0, published by DCI in 2005. In areas of image and sound encoding, transport security and network services, today's systems offer practical interoperability and an excellent movie-going experience. These systems were designed, however, using de facto industry practices.

With the publication of the Digital Cinema System Specification [DCI-DCSS], and the publication of required standards from SMPTE, ISO, and other bodies, it is possible to design and build d-cinema equipment that meets all DCI requirements. Manufacturers preparing new designs and theaters planning expensive upgrades are both grappling with the same question: how can they know whether a d-cinema system is compliant with DCI requirements?

Note: This test plan references standards from SMPTE, ISO, and other bodies that have specific publication dates. The specific versions of the referenced documents to be used in conjunction with this test plan shall be those listed in Appendix F.

1.1. Overview

This Compliance Test Plan (CTP) was developed by DCI to provide uniform testing procedures for d-cinema equipment. The CTP details testing procedures, reference files, design evaluation methods, and directed test sequences for content packages and specific types of equipment. These instructions will guide the Test Operator through the testing process and the creation of a standard DCI compliance evaluation report.

This document is presented in three parts and eight appendices.

- Part I. Procedural Tests — contains a library of test procedures for elements of a d-cinema system. Many of the test procedures are applicable to more than one element. The procedure library will be used in Part III. Consolidated Test Procedures to produce complete sequences for testing content and specific types of systems.

- Chapter 2. Digital Cinema Certificates — describes test objectives and procedures to test d-cinema certificates and devices which use d-cinema certificates for security operations.

- Chapter 3. Key Delivery Messages — describes test objectives and procedures to test Key Delivery Messages (KDM) and devices which decrypt KDM payloads.

- Chapter 4. Digital Cinema Packaging — describes test objectives and procedures to test the files in a Digital Cinema Package (DCP).

- Chapter 5. Common Security Features — describes test objectives and procedures to test security requirements that apply to more than one type of d-cinema device (e.g., an SMS or a projector). Security event logging is also addressed in this chapter.

- Chapter 6. Media Block — describes test objectives and procedures to test that Media Block device operations are correct and valid.

- Chapter 7. Projector — describes test objectives and procedures to test that projector operations are correct and valid.

- Chapter 8. Screen Management System — describes test objectives and procedures to test that Screen Management System (SMS) operations are correct and valid.

- Part II. Design Evaluation Guidelines — contains two chapters that describe DCI security requirements for the design and implementation of d-cinema equipment, and methods for verifying those requirements through document analysis. Requirements in this part of the CTP cannot easily be tested by normal system operation. FIPS 140 requirements for deriving random numbers, for example, must be verified by examining the documentation that is the basis of the FIPS certification.

- Chapter 9. FIPS Requirements for a Type 1 SPB — provides a methodology for evaluating the results of a FIPS 140 security test. Material submitted for testing and the resulting reports are examined for compliance with [DCI-DCSS] requirements.

- Chapter 10. DCI Requirements Review — provides a methodology for evaluating system documentation to determine whether system aspects that cannot be tested by direct procedural method are compliant with [DCI-DCSS] requirements.

- Part III. Consolidated Test Procedures — contains consolidated test sequences for testing d-cinema equipment and content.

- Chapter 11. Testing Overview — Provides an overview of the consolidated testing and test reports and a standard form for reporting details of the testing environment.

- Chapter 13. Digital Cinema Server Consolidated Test Sequence — A directed test sequence for testing a stand-alone Digital Cinema Server comprising a Media Block (MB) and a Screen Management System (SMS).

- Chapter 14. Digital Cinema Projector Consolidated Test Sequence — A directed test sequence for testing a stand-alone Digital Cinema Projector with Link Decryptor Block (LDB).

- Chapter 15. Digital Cinema Projector with MB Consolidated Test Sequence — A directed test sequence for testing a Digital Cinema Projector having an integrated IMB and an integrated or external SMS.

- Chapter 16. Link Decryptor/Encryptor Consolidated Test Sequence — A directed test sequence for testing an image processing device which is a remote SPB Type 1 with both Link Encryptor and Link Decryptor capabilities.

- Chapter 17. Digital Cinema Server Consolidated Confidence Sequence — A confidence test sequence for a stand-alone Digital Cinema Server, based on an existing CTP compliance test performed according to Chapter 13. Digital Cinema Server Consolidated Test Sequence.

- Chapter 18. Digital Cinema Projector Consolidated Confidence Sequence — A confidence test sequence for a stand-alone Digital Cinema Projector, based on an existing CTP compliance test performed according to Chapter 14. Digital Cinema Projector Consolidated Test Sequence.

- Chapter 19. Digital Cinema Projector with MB Consolidated Confidence Sequence — A confidence test sequence for a Digital Cinema Projector with MB, based on an existing CTP compliance test performed according to Chapter 15. Digital Cinema Projector with MB Consolidated Test Sequence.

- Chapter 20. OMB Consolidated Test Sequence — A directed test sequence for testing an OMB.

- Chapter 21. Digital Cinema Projector with IMBO Consolidated Test Sequence — A directed test sequence for testing a Digital Cinema Projector with IMBO.

- Chapter 22. OMB Consolidated Confidence Sequence — A confidence test sequence for an OMB, assuming the existence of an original CTP compliance test performed according to Chapter 20. OMB Consolidated Test Sequence.

- Chapter 23. Digital Cinema Projector with IMBO Consolidated Confidence Sequence — A confidence test sequence for a Digital Cinema Projector with IMBO, assuming the existence of an original CTP compliance test performed according to Chapter 21. Digital Cinema Projector with IMBO Consolidated Test Sequence.

- Appendix A. Test Materials — Provides a complete description of all reference files used in the test procedures including Digital Cinema Packages, KDMs and Certificates.

- Appendix B. Equipment List — Provides a list of test equipment and software used to perform the test procedures. The list is not exclusive and in fact contains many generic entries intended to allow Testing Organizations to exercise some discretion in selecting their tools.

- Appendix C. Source Code — Provides computer programs in source code form. These programs are included here because suitable alternatives were not available at the time this document was prepared.

- Appendix D. ASM Simulator — Provides documentation on asm-requester and asm-responder, two programs that simulate the behavior of devices that send and receive Auditorium Security Messages.

- Appendix E. GPIO Test Fixture — Provides a schematic for a GPIO test fixture.

- Appendix F. Reference Documents — Provides a complete list of the documents referenced by the test procedures and design requirements.

- Appendix G. Digital Cinema System Specification References to CTP — Provides a cross reference of [DCI-DCSS] sections to the respective CTP sections.

- Appendix H. Abbreviations — Provides explanations of the abbreviations used in this document.

1.2. Normative References

The procedures in this document are substantially traceable to the many normative references cited throughout. In some cases, DCI has chosen to express a constraint or required behavior directly in this document. In these cases it will not be possible to trace the requirement directly to an external document. Nonetheless, the requirement is made normative for the purpose of DCI compliance testing by its appearance in this document.

1.3. Audience

This document is written to inform readers from many segments of the motion picture industry, including manufacturers, content producers, distributors, and exhibitors. Readers will have specific needs of this text and the following descriptions will help identify the parts that will be most useful to them. Generally though, the reader should have technical experience with d-cinema systems and access to the required specifications. Some experience with general operating system concepts and installation of source code software will be required to run many of the procedures.

- Equipment Manufacturers

- To successfully pass a compliance test, manufacturers must be aware of all requirements and test procedures. In addition to understanding the relevant test sequence and being prepared to provide the Test Operator with information required to complete the tests in the sequence, the manufacturer is also responsible for preparing the documentation called for in Part II. Design Evaluation Guidelines.

- Testing Organizations and Test Operators

- The Testing Organizations and Test Operators are responsible for assembling a complete test laboratory with all required tools and for guiding the manufacturer through the process of compliance testing. Like the manufacturer, Testing Organizations and Test Operators must be aware of all requirements and test procedures at a very high level of detail.

- System Integrators

- Integrators will need to understand the reports issued by Testing Organizations. Comparing systems using reported results will be more accurate if the analyst understands the manner in which individual measurements are made.

1.4. Conventions and Practices

1.4.1. Typographical Conventions

This document uses the following typographical conventions to convey information in its proper context.

A Bold Face style is used to display the names of commands to be run on a computer system.

A Fixed Width font is used to express literal data such as string values or element names for XML documents, or command-line arguments and output. Examples that illustrate command input and output are displayed in a Fixed Width font on a shaded background:

    $ echo "Hello, World!"
    Hello, World! (1)

Less-than (<) and greater-than (>) symbols are used to illustrate generalized input values in command-line examples. They are placed around the generalized input value, e.g., <input-value>. These symbols are also used to direct command output in some command-line examples, and are also an integral part of the XML file format.

Callouts (white numerals on a black background, as in the example above) are used to provide reference points for examples that include explanations. Examples with callouts are followed by a list of descriptions explaining each callout.

Square brackets ([ and ]) are used to denote an external document reference, e.g., [SMPTE-377-1].
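As a small illustration of the angle-bracket convention described above, the following Python sketch fills generalized input values into a command template before the command is run. The helper name `fill_placeholders`, the sample command, and the file names are hypothetical and do not come from this document. The sketch assumes placeholders are written as lowercase words joined by hyphens, as in `<input-value>`, so a bare `>` used for output redirection is left untouched.

```python
import re

# Matches generalized input values such as <input-value> (assumed naming style).
PLACEHOLDER = re.compile(r"<([a-z][a-z0-9-]*)>")


def fill_placeholders(template: str, values: dict) -> str:
    """Replace each <name> in the template with values[name]."""

    def substitute(match):
        name = match.group(1)
        if name not in values:
            # Refuse to produce a command that still contains a placeholder.
            raise KeyError(f"no value supplied for <{name}>")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical command: the middle ">" is shell redirection, not a placeholder.
command = fill_placeholders(
    "cat <input-file> > <output-file>",
    {"input-file": "cpl.xml", "output-file": "copy.xml"},
)
print(command)
```

Raising an error for a missing value is a design choice in this sketch: a placeholder left in place would otherwise be interpreted by the shell as redirection.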

1.4.2. Documentation Format

The test procedures documented in Part I. Procedural Tests will contain the following sub-sections (except as noted):

- Objective — Describes what requirements or assertions are to be proven by the test.

- Procedures — Defines the steps to be taken to prove the requirements or assertions given in the corresponding objective.

- Material — Describes the material (reference files) needed to execute the test. This section may not be present, for example, when the objective can be achieved without reference files.

- Equipment — Describes what physical equipment and/or computer programs are needed for executing the test. The equipment list in each procedure is assumed to contain the Test Subject. If the equipment list contains one or more computer programs, the list is also assumed to contain a general purpose computer with a POSIX-like operating system (e.g., Linux). This section may not be present, for example, when the objective can be achieved by observation alone.

- References — The set of normative documents that define the requirements or assertions given in the corresponding objective.
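As an informal illustration of this layout, the sketch below models one procedure as a small Python record. The names `ProcedureRecord`, `EquipmentItem`, and `effective_equipment`, along with the sample procedure, are hypothetical and are not defined by this document. The only rules encoded are the ones stated above: Material and Equipment may be absent, the Test Subject is always implied, and a general purpose computer is implied whenever a computer program is listed.

```python
from dataclasses import dataclass, field
from typing import List

TEST_SUBJECT = "Test Subject"
HOST_COMPUTER = "General purpose computer (POSIX-like OS)"


@dataclass
class EquipmentItem:
    name: str
    is_program: bool = False  # True for software tools, False for hardware


@dataclass
class ProcedureRecord:
    title: str
    objective: str
    procedures: List[str]
    references: List[str]
    # Material and Equipment sections may be absent, so they default to empty.
    material: List[str] = field(default_factory=list)
    equipment: List[EquipmentItem] = field(default_factory=list)

    def effective_equipment(self) -> List[str]:
        """Listed equipment plus the items the text says are assumed."""
        names = [TEST_SUBJECT] + [item.name for item in self.equipment]
        if any(item.is_program for item in self.equipment):
            names.append(HOST_COMPUTER)
        return names


# Hypothetical procedure used only to exercise the record.
example = ProcedureRecord(
    title="Hypothetical certificate check",
    objective="Show that a certificate file can be parsed.",
    procedures=["Run the parser on the certificate.", "Confirm no errors are reported."],
    references=["[DCI-DCSS]"],
    equipment=[EquipmentItem("openssl", is_program=True)],
)
print(example.effective_equipment())
```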

The following language is used to identify persons and organizations by role:

- Testing Organization

- An organization which offers testing services based on this document.

- Test Operator

- A member of the Testing Organization that performs testing services.

- Test Subject

- A device or computer file which is the subject of a test based on this document.

The following language is used for referring to individual components of the system or the system as a whole:

- Media Block and Controlling Devices

- This term refers to the combination of a Media Block (MB), Screen Management System (SMS) or Theater Management System (TMS), content storage and all cabling necessary to interconnect these devices. Depending upon actual system configuration, all of these components may exist in a single chassis or may exist in separate chassis. Some or all components may be integrated into the projector (see below).

- Projector

- The projector is the device responsible for converting the electrical signals from the Media Block to a human visible picture on screen. This includes all necessary power supplies and cabling.

- Projection System

- A complete exhibition system to perform playback of d-cinema content. This includes all cabling, power supplies, content storage devices, controlling terminals, media blocks, projection devices and sound processing devices necessary for a faithful presentation of the content.

- Theater System

- The projection system plus all the surrounding devices needed for full theater operations including theater loudspeakers and electronics (the "B-Chain"), theater automation, a theater network, and management workstations (depending upon implementation), etc.

Note: there may be additional restrictions, depending on implementation. For example, some Media Blocks may refuse to perform even the most basic operations as long as they are not attached to an SMS or Projector. For these environments, additional equipment may be required.

1.5. Digital Cinema System Architecture

The [DCI-DCSS] allows different system configurations, meaning different ways of grouping functional modules and equipment together. The following diagram shows what is considered to be a typical configuration allowed by DCI.

The left side of the diagram shows the extra-theater part, which deals with DCP and KDM generation and transport. The right side shows the intra-theater part, which shows the individual components of the projection system and how they work together. This test plan will test for proper DCP and KDM formats (i.e., conforming to the Digital Cinema System Specification), for proper transport of the data and for proper processing of valid and malformed DCPs and KDMs. In addition, physical system properties and performance will be tested in order to ensure that the system plays back the data as expected and implements all security measures as required by DCI.

While the above diagram shows what is considered to be a typical configuration allowed by the Digital Cinema System Specification, the [DCI-DCSS] still leaves room for different implementations. For example, some manufacturers may choose to integrate the Media Decryptor blocks into the projector, or share storage between d-cinema servers.

1.6. Strategies for Successful Testing

In order to successfully execute one of the test sequences given in Part III. Consolidated Test Procedures, the Test Operator must understand the details of many documents and must have assembled the necessary tools and equipment to execute the tests. This document provides all the necessary references to standards, tutorials and tools to orient the technical reader.

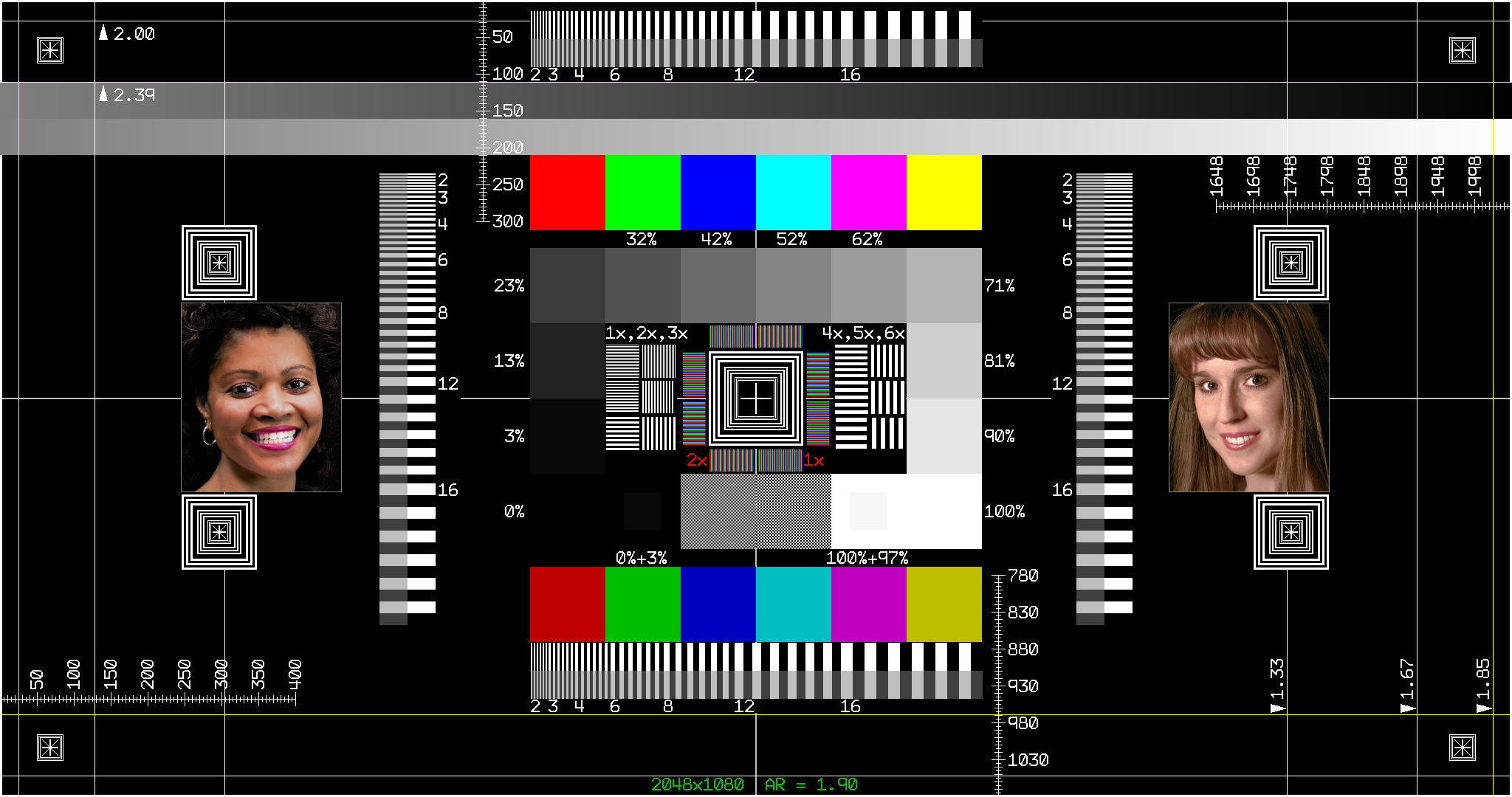

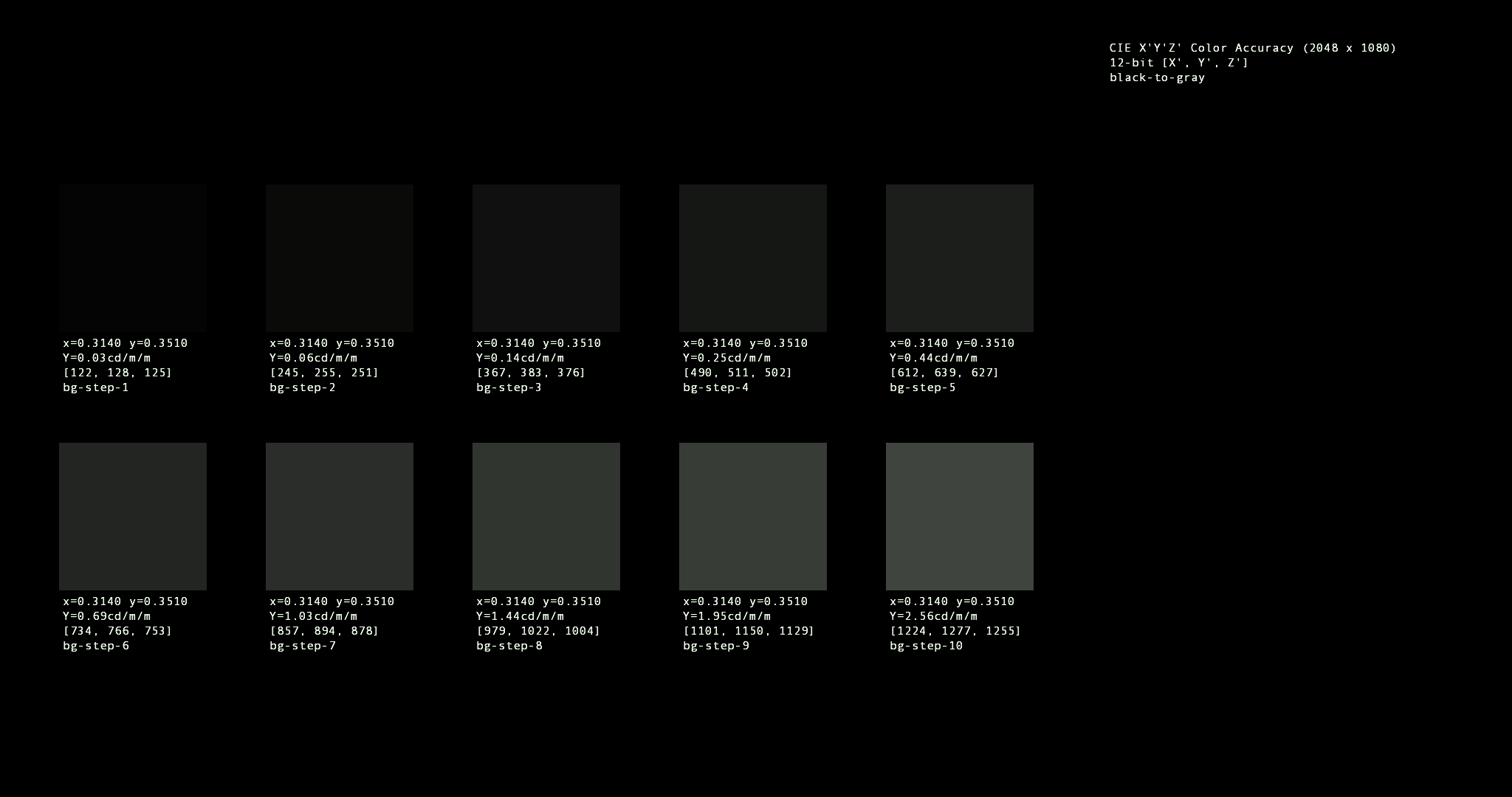

As an example, Section 7.5.12 requires a calculation to be performed on a set of measured and reference values to determine whether a projector's colorimetry is within tolerance. Section C.6 provides an implementation of this calculation, but the math behind the program and the explanation behind the math are not presented in this document. The Test Operator and system designer must read the reference documents noted in Section 7.5.12 (and any references those documents may make) in order to fully understand the process and create an accurate design or present accurate results on a test report.
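To give a sense of the kind of calculation involved, here is a minimal sketch in Python. It is not the program from Section C.6 and does not reproduce the method defined by the documents referenced in Section 7.5.12. It assumes, purely for illustration, that each measured and reference value is an (x, y) chromaticity pair and that the tolerance is a simple per-coordinate limit. The function names, patch names, sample values, and tolerance are all hypothetical.

```python
from typing import Dict, Tuple

Chromaticity = Tuple[float, float]  # (x, y) pair, assumed for illustration


def within_tolerance(measured: Chromaticity, reference: Chromaticity, tol: float) -> bool:
    """True when both coordinates differ from the reference by at most tol."""
    dx = abs(measured[0] - reference[0])
    dy = abs(measured[1] - reference[1])
    return dx <= tol and dy <= tol


def evaluate(
    measured: Dict[str, Chromaticity],
    reference: Dict[str, Chromaticity],
    tol: float,
) -> Dict[str, bool]:
    """Compare every reference patch against its measurement."""
    return {
        name: within_tolerance(measured[name], reference[name], tol)
        for name in reference
    }


# Illustrative values only; real references and tolerances come from the
# normative documents cited by the test procedure.
reference = {"red": (0.680, 0.320), "green": (0.265, 0.690), "blue": (0.150, 0.060)}
measured = {"red": (0.682, 0.319), "green": (0.275, 0.690), "blue": (0.150, 0.060)}

results = evaluate(measured, reference, tol=0.005)
for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Even in a sketch this small, the result depends on choices such as the coordinate system and the shape of the tolerance region, which is why the reference documents must be read before results are reported.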

Preparing a Test Subject and the required documentation requires the same level of understanding as executing the test. Organizations may even choose to practice executing the test internally in preparation for a test by a Testing Organization. The test procedures have been written to be independent of any proprietary tools. In some cases this policy has led to an inefficient procedure, but the resulting transparency provides a reference measurement that can be used to design new tools, and verify results obtained from any proprietary tools a Testing Organization may use.